In our menu above, under “AVM Information” we always have the latest version of our testing schedule. 2025 AVM Validation Testing Dates have been published there as of today.

Study: AVMetrics’ Predictive Testing Methodology

Introducing PTM™ – Revolutionizing AVM Testing for Accurate Property Valuations

When it comes to residential property valuation, Automated Valuation Models (AVMs) have a lurking problem. AVM testing is broken and has been for some time, which means that we don’t really know how much we can or should rely on AVMs for accurate valuations.

Testing AVMs seems straightforward: take the AVM’s estimate and compare it to an arm’s length market transaction. The approach is theoretically sound and widely agreed upon but unfortunately no longer possible.

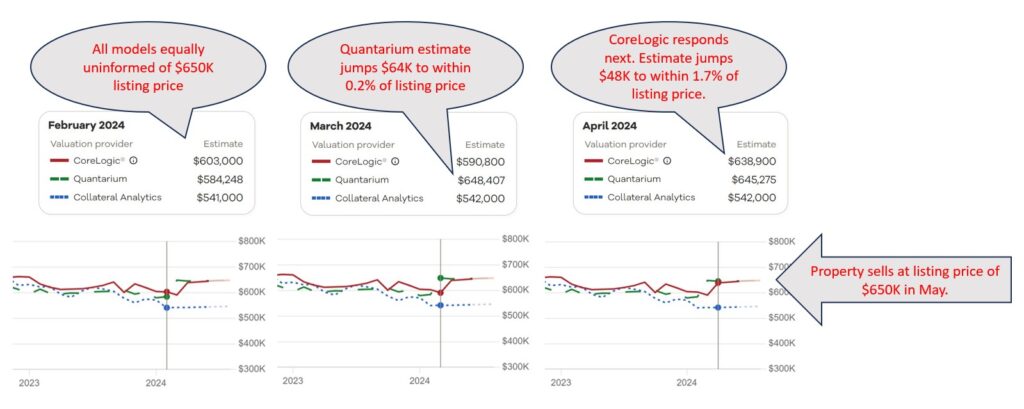

Once you see the problem, you cannot unsee it. The issue lies in the fact that most, if not all, AVMs have access to multiple listing data, including property listing prices. Studies have shown that many AVMs anchor their predictions to these listing prices. While this makes them more accurate when they have listing data, it casts serious doubt on their ability to accurately assess property values in the absence of that information.

All this opens up the question: what do we want to use AVMs for? If all we want is to get a good estimate of what price a sale will close at, once we know the listing price, then they are great. However, if the idea is to get an objective estimate of the property’s likely market value to refinance a mortgage or to calculate equity or to measure default risk, then they are… well, it’s hard to say. Current testing methodology can’t determine how accurate they are.

But there is promise on the horizon. After five years of meticulous development and collaboration with vendors/models, AVMetrics is proud to unveil our game-changing Predictive Testing Methodology (PTM™), designed specifically to circumvent the problem that is invalidating all current testing. AVMetrics’ new approach will replace the current methods cluttering the landscape and finally provide a realistic view of AVMs’ predictive capabilities.1

At the heart of PTM™ lies our extensive Model Repository Database (MRD™), housing predictions from every participating AVM for every residential property in the United States – an astonishing 100 to 120 million properties per AVM. With monthly refreshes, this database houses more than a billion records per model and thereby offers unparalleled insights into AVM performance over time.

But tracking historical estimates at massive scale wasn’t enough. To address the influence of listing prices on AVM predictions, we’ve integrated a national MLS database into our methodology. By pinpointing the moment when AVMs gained visibility into listing prices, we can assess predictions for sold properties just before this information influenced the models, which is the key to isolating confirmation bias. While the concept may seem straightforward, the execution is anything but. PTM™ navigates a complex web of factors to ensure a level playing field for all models involved, setting a new standard for AVM testing.
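The core idea above, scoring the last estimate recorded before the listing became visible, can be sketched in a few lines. This is only an illustration under stated assumptions, not AVMetrics' implementation: the record layout, the function name `pre_listing_error`, and the sample figures are all hypothetical.

```python
from datetime import date


def pre_listing_error(estimates, list_date, sale_price):
    """Signed percentage error of the latest estimate dated strictly
    before list_date, or None if no such estimate exists.

    estimates: list of (as_of_date, value) tuples (hypothetical layout).
    """
    prior = [(d, v) for d, v in estimates if d < list_date]
    if not prior:
        return None  # no estimate predates the listing
    _, value = max(prior, key=lambda pair: pair[0])
    return (value - sale_price) / sale_price


# Hypothetical monthly snapshots for one property (assumed data).
snapshots = [
    (date(2024, 1, 1), 380_000),
    (date(2024, 2, 1), 390_000),
    (date(2024, 3, 1), 418_000),  # produced after the property was listed
]
error = pre_listing_error(snapshots, date(2024, 2, 15), 400_000)
```

Here the March estimate is ignored because it postdates the listing, so the model is scored on its February estimate instead. A real test would also have to handle the many complications the text alludes to, such as differing refresh dates across models.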

So, how do we restore confidence in AVMs? With PTM™, we’re enabling accurate AVM testing, which in turn paves the way for more accurate property valuations. Those, in turn, empower stakeholders to make informed decisions with confidence. Join us in revolutionizing AVM testing and moving into the future of improved property valuation accuracy. Together, we can unlock new possibilities and drive meaningful change in the industry.

1 The majority of the commercially available AVMs support this testing methodology, and more than two solid years of testing have been conducted on over 25 models.

Feds to Lenders: Take AVMs Seriously

Regulators are signaling that they are going to be looking at how AVMs are used and whether lenders have appropriately tested them and continuously monitor them for valuation discrimination. This represents a change in focus, and it means that all lenders need to pay attention to AVM validation to avoid unfavorable attention from government regulators.

On Feb 12, the FFIEC issued a statement on examinations from regulators. It specifically stated that it didn’t represent a change in principles, nor a change in guidance, and not even a change in focus. It was just a friendly announcement about the exam process, which will focus on whether institutions can identify and mitigate bias in residential property valuations.

Law firm Husch Blackwell published their interpretation a week later. Their analysis included consideration of the June 2023 FFIEC statement on the proposed AVM quality control rule, which would include bias as a “fifth factor” when evaluating AVMs. They interpret these different announcements as part of a theme, an extended signal to the industry that all valuations, and AVMs in particular, are going to receive additional scrutiny. That may be because bias is as important as quality, or because being unbiased is an inherent aspect of quality. Either way, the subject of bias is drawing the attention, but the result will be a thorough examination of all practices around valuation, including AVMs, from oversight to validation, training, auditing, etc.

AVM quality has theoretically been an issue that could be enforced by regulators in some circumstances for over a decade. What we’re seeing is not just an expansion from accuracy into questions of bias. We’re also seeing an expansion from banks into all lenders, including non-bank lenders. And, they are signaling that examinations will focus on bias, which is an expansion from the theoretical requirement to an actual, manifest, serious requirement.

AVM Testing Schedule Announced for 2024

In our menu above, under “AVM Information” we always have the latest version of our testing schedule. 2024 AVM Validation Testing Dates have been published there as of today.

Our Perspective on Brookings’ AVM Whitepaper

As the publisher of the AVMNews, we felt compelled to respond to Brookings’ very thorough whitepaper on AVMs (Automated Valuation Models), published on October 12, 2023, and share our thoughts on the recommendations and insights presented therein.

First and foremost, we would like to acknowledge the thoroughness and dedication with which Brookings conducted their research. Their whitepaper contains valuable observations, clear explanations and wise recommendations that unsurprisingly align with our own perspective on AVMs.

Here’s our stance on key points from Brookings’ whitepaper:

- Expanding Public Transparency: We wholeheartedly support increased transparency in the AVM industry. In fact, Lee’s recent service on the TAF IAC AVM Task Force led to a report recommending greater transparency measures. Transparency not only fosters trust but also enhances the overall reliability of AVMs.

- Disclosing More Information to Affected Individuals: We are strong advocates for disclosing AVM accuracy and precision measures to the public. Lee’s second Task Force report also recommended the implementation of a universal AVM confidence score. This kind of information empowers individuals with a clearer understanding of AVM results.

- Guaranteeing Evaluations Are Independent: Ensuring the independence of evaluations is paramount. Compliance with this existing requirement should be non-negotiable, and we fully support this recommendation.

- Encouraging the Search for Less Discriminatory AVMs: Promoting the development and use of less discriminatory AVMs aligns with our goals. We view this as a straightforward step toward fairer AVM practices.

Regarding Brookings’ additional points 5, 6, and 7, we find them to be aspirational but not necessarily practical in the current landscape. In the case of #6, regulating Zillow, it appears that existing and proposed regulations adequately cover entities like Zillow, provided they use AVMs in lending.

While we appreciate the depth of Brookings’ research, we would like to address a few misconceptions within their paper:

- Lender Grade vs. Platform AVMs: We firmly believe that there is a distinction between lender-grade and platform AVMs, as evidenced by our testing and assessments. Variations exist not only between AVM providers but also within the different levels of AVMs offered by a single provider.

- “AVM Evaluators… Are Not Demonstrably Informing the Public:” We take exception to this statement. We actively contribute to public knowledge through articles, analyses, newsletters (AVMNews and our State of AVMs), a quarterly GIF, a comprehensive Glossary, and participation in industry groups and task forces. We also serve the public by making AVM education available, and we would have been more than willing to collaborate or consult with Brookings during their research.

But, we’re obligated not to just give away our analysis or publish it. Our partners in the industry provide us their value estimates and we provide our analysis back to them. It’s a major way in which they improve, because they’re able to see 1) an independent test of accuracy, and 2) a comparison to other AVMs. They can see where they’re being beaten, which means opportunity for improvement. But, in order to participate, they require some confidentiality to protect their IP and reputation.

We should comment on the concept of independence that Brookings emphasized. Independent evaluation is exceedingly important in our opinion, as the only independent AVM evaluator. Brookings mentioned in passing that Mercury is not independent, but they also mentioned Fitch as an independent evaluator. We agree with Brookings that a vendor who also sells, builds, resells, uses or advocates for certain AVMs may be biased (or may appear to be biased) in auditing them; validation must be able to “effectively challenge” the models being tested.

We do not believe Fitch satisfies ongoing independent testing, validation and documentation of testing which requires resources with the competencies and influences to effectively challenge AVM models. Current guidelines require validation to be performed in real-world conditions, to be ongoing, and to be reported on at least annually. When there are changes to the models, the business environment or the marketplace, the models need to be re-validated.

Fitch’s assessment of AVM providers is focused on each vendor’s model testing results, review of management and staff experience, data sourcing, technology effectiveness and quality control procedures. Fitch’s methodology of relying on analyses obtained from the AVM providers’ model testing results would not categorize them as an “independent AVM evaluator,” as reliance on testing done by the AVM providers themselves does not meet any definition of “independent” per existing regulatory guidance. AVMetrics is in no way beholden to the AVM developers or the resellers in any way; we draw no income from selling, developing, or using AVM products.

For almost two decades, we have continued to test AVMs against hundreds of thousands (sometimes millions) of transactions per quarter and use a variety of techniques to level the playing field between AVMs. We provide detailed and transparent statistical summaries and insights to our newsletter readers, and we publish charts that give insights into the depth and thoroughness of our analysis, whereas we have not observed this from other testing entities. Our research spanning eighteen years shows that even overall good-performing models are less reliable in certain circumstances, so one of the less obvious risks that we would highlight is reliance on a “good” model that is poor in a specific geography, price level or property type. Models should be tested in each one of these subcategories in order to assess their reliability and risk profile.

Identifying “reliable models” isn’t straightforward. Performance varies over time as market conditions change and models are tweaked. Performance also varies between locations, so a model that is extremely reliable overall may not be effective in a specific region. Furthermore, models that are effective overall may not be effective at all price levels, for example: low-priced entry-level homes or high-priced homes. Finally, very effective models will also produce estimates that they admit have lower confidence scores (and higher FSDs), and which should in all prudence be avoided, but without adequate testing and understanding may be inadvertently relied upon. Proper testing and controls can mitigate these problems.
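To make the point about subcategories concrete, here is a minimal sketch of segment-level scoring. The metrics (median absolute percentage error and the share of estimates within 10% of the sale price) are illustrative choices rather than a description of AVMetrics' actual statistics, and the field names, the function `segment_metrics`, and the sample records are hypothetical.

```python
from collections import defaultdict
from statistics import median


def segment_metrics(records, key):
    """Group records by the given segment key and report, per segment,
    the count, the median absolute percentage error, and the share of
    estimates within 10% of the sale price."""
    groups = defaultdict(list)
    for r in records:
        err = abs(r["estimate"] - r["sale_price"]) / r["sale_price"]
        groups[r[key]].append(err)
    return {
        seg: {
            "n": len(errs),
            "mdape": median(errs),
            "within_10pct": sum(e <= 0.10 for e in errs) / len(errs),
        }
        for seg, errs in groups.items()
    }


# Hypothetical test records for one model in two counties (assumed data).
records = [
    {"county": "A", "estimate": 102_000, "sale_price": 100_000},
    {"county": "A", "estimate": 196_000, "sale_price": 200_000},
    {"county": "B", "estimate": 150_000, "sale_price": 120_000},
    {"county": "B", "estimate": 303_000, "sale_price": 300_000},
]
by_county = segment_metrics(records, "county")
```

In this toy data the model looks strong in county A and much weaker in county B, which is exactly the kind of difference an overall average would hide. The same function can be keyed on a price tier or property type field instead.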

Regarding cascades, the Brookings paper leans on cascades as an important part of the solution for less discriminatory AVMs. We agree with Brookings: a cascade is the most sophisticated way to use AVMs. It maximizes accuracy and minimizes forecast error and risk. By subscribing to multiple AVMs, you can rank-order them to choose the highest performing AVM for each situation, which we call using a Model Preference Table™. The best possible AVM selection approach is a cascade, which combines that MPT™ with business logic to define when an AVM’s response is acceptable and when it should be set aside for the next AVM or another form of valuation. The business logic can incorporate the Forecast Standard Deviation provided by the model and the institution’s own risk-tolerance to determine when a value estimate is acceptable.
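The cascade logic described above can be sketched roughly as follows. This is a simplified illustration, not the MPT™ itself: the model names, the function `run_cascade`, the response layout, and the 0.13 FSD tolerance are all assumptions made for the example.

```python
def run_cascade(preference_table, responses, max_fsd):
    """Walk the ranked models and return the first estimate whose FSD is
    at or below the institution's tolerance.

    preference_table: model names, best-ranked first (hypothetical names).
    responses: dict of model name -> (value, fsd); a missing key or None
               means the model returned no value.
    Returns (model, value), or None to signal that another form of
    valuation is needed.
    """
    for model in preference_table:
        response = responses.get(model)
        if response is None:
            continue  # no hit from this model, try the next one
        value, fsd = response
        if fsd <= max_fsd:
            return model, value
    return None


# Hypothetical ranking for one segment and hypothetical model responses.
mpt = ["model_a", "model_b", "model_c"]
responses = {
    "model_a": (410_000, 0.18),  # top-ranked, but FSD exceeds tolerance
    "model_b": (402_000, 0.09),
    "model_c": (395_000, 0.07),
}
choice = run_cascade(mpt, responses, max_fsd=0.13)
```

The top-ranked model is set aside because its FSD is above the tolerance, so the cascade falls through to the second-ranked model. In practice the ranking would differ by geography, price level and property type, and the business logic would likely include more than a single FSD cutoff.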

Mark Sennott (industry insider) recently published a whitepaper describing current issues with cascades, namely that some AVM resellers will give favorable positions to AVMs based on favors, pricing or other factors that do NOT include performance as evaluated by independent firms like AVMetrics. This goes to the additional transparency for which Brookings advocates. We’re all in favor.

We actually see a strong parallel between Mark Sennott’s whitepaper and the Brookings paper. Brookings makes the case to regulators, whereas Sennott was speaking to the AVM industry, but both of them argue for more transparency and responsible leadership by the industry. In retrospect, Sennott appears to have been very prescient.

In order to ensure that adequate testing is done regularly, we recommend that a control be implemented to create transparency around how the GSEs or other originators are performing their testing. This could be done in a variety of ways. One method might require the GSE or lending institution to indicate their last AVM testing date on each appraisal waiver. Regardless of how it’s done, the goal would be to create a mechanism that would increase commitment to appropriate testing. The GSEs could provide a leadership role by showing how they would like lending institutions to demonstrate their independent AVM testing as required by OCC 2010-42 and 2011-12.

In conclusion, we appreciate Brookings’ dedication to asking questions and providing perspective on the AVM industry. We share their goals for transparency, fairness, and accuracy. We believe that open dialogue and collaboration by all the valuation industry participants are the keys to advancing the responsible use of AVMs.

We look forward to continuing our contributions to the AVM community and working toward a brighter future for this essential technology.

Why Mark Sennott’s Whitepaper Stopped Us Cold

At AVMetrics, we have to admit having mixed feelings about Mark Sennott’s recent whitepaper on AVMs. We’re quite grateful for his praise on our testing, which he describes as “robust, methodical and truly independent.” He echoes some of our key concerns:

- AVMs perform very differently, so it is important to test before using

- AVM performance changes more frequently than you’d think

- Everyone should employ a cascade using multiple AVMs, because it dramatically increases the accuracy of the delivered results.

However, there was something quite disconcerting in Mark’s telling of how AVMs are being used. In Mark’s words:

In practice, however, the top performing AVMs, based on independent testing performed by companies like AVMetrics, are not always the ones being delivered to lenders. The reason: self-interest on the part of the AVM delivery platforms who also sell and promote their own AVMs.

This very troubling delta between posture and operating practice had to be confronted first-hand by one of the lenders for which I provide guidance. What at first blush appeared as a straightforward exercise for the lender in vetting a platform provider’s cascade against AVMetrics independent testing results, became a ponderous journey to overcome contractual headwinds against a simple assurance the provider would indeed provide the highest scoring AVM model per AVMetrics recommendations. This was not the first time I experienced this apparent conflict of interest.

Kudos to Mark for writing openly about a practice that many in the industry would probably prefer that he kept quiet about.

2023 Testing Schedule Announced

AVMetrics’ ongoing independent AVM testing continues in 2023 according to the schedule in the link below (updated as of January 3, 2023). Please download the pdf with the revised 2023 testing schedule.

Four Points to Consider Before Outsourcing AVM Validation

AVMs are not only fairly accurate, they are also affordable and easy to use. Unfortunately, using them in a “compliant” fashion is not as easy. Regulatory Bulletins OCC 2010-42 and OCC 2011-12 describe a lot of requirements that can be challenging for a regional or community institution:

- ongoing independent testing and validation and documentation of testing;

- understanding each AVM model’s conceptual and methodological soundness;

- documenting policies and procedures that define how to use AVMs and when not to use AVMs;

- establishing targets for accuracy and tolerances for acceptable discrepancies.

The extent to which these requirements are applied by your regulator is most likely proportional to the extent to which AVMs are used within your organization; if AVMs are used extensively, regulatory oversight will likely demand much tighter adherence to the requirements as well as much more comprehensive policies and procedures.

Although compliance itself is not a function that can be outsourced (it is the sole responsibility of the institution), elements of the regulatory requirements can be effectively handled outside the organization through outsourcing. As an example, the first bullet point, “ongoing independent testing and validation and documentation of testing,” requires resources with the competencies and influences to effectively challenge AVM models. In addition, the “independent” aspect is challenging to accomplish unless a separate department within the institution is established that does not report up through the product and/or procurement verticals (e.g. similar to Audit, or Model Risk Management, etc.). Whether your institution is a heavy AVM user or not, the good news is that finding the right third-party to outsource to will facilitate all of the bullet points above:

- documentation is included as part of an independent testing and validation process and it can be incorporated into your policies and procedures;

- the results of the testing will help you shape your understanding of where and when AVMs can and cannot be used;

- the results of the testing will inform your decisions regarding the accuracy and performance thresholds that fit within your institution’s risk appetite;

- in addition, an outsourced specialist may also be able to provide various levels of consultation assistance in areas where you may not have the internal expertise.

Before deciding whether outsourcing makes sense for you, here are some potential considerations. If you can answer “no” to all of these questions, then outsourcing might be a good option, especially if you don’t have an independent Analytics unit in-house that has the resource bandwidth to accommodate the AVM testing and validation processes:

- Is this process strategically critical? I.e., does your validation of AVMs benefit you competitively in a tangible way?

- If your validation of AVMs is inadequate, can this substantially affect your reputation or your position within the marketplace?

- Is outsourcing impractical for any reason? I.e., are there other business functions that preclude separating the validation process?

- Does your institution have the same data availability and economies of scale as a specialist?

The Way Forward

Here are some suggestions on how to go about preparing yourself for selecting your outsource partner:

- Specify what you need outsourced. If you already have Policies and Procedures documented and processes in place, there may be no need to look for that capability, but there will necessarily still be the need to incorporate any testing and validation results into your existing policies and procedures. If you have previously done extensive evaluations of the AVMs that you use, in terms of their models’ conceptual soundness and outcomes analysis, there’s no need to contract for that, either. See our article on Regulatory Oversight to get some ideas about those requirements.

- Identify possible partners, such as AVMetrics, and evaluate their fit. Here’s what to look for:

  - Expertise. It’s a technical job, requiring a fair amount of analysis and a tremendous amount of knowledge about regulatory requirements in general, and specifically knowledge relative to AVMs; check the résumés of the experts with whom you plan to partner.

  - Independence. A vendor who also sells, builds, resells, uses or advocates for certain AVMs may be biased (or may appear to be biased) in auditing them; validation must be able to “effectively challenge” the models being tested.

  - Track record. Stable partners are better, and a long term relationship lowers the cost of outsourcing; so look for a partner with a successful track record in performing AVM validations.

- Open up conversations with potential partners early because the process can take months, particularly if policies and procedures need to be developed; although validations can be successfully completed in a matter of days, that is not the norm.

- Make sure your staff has enough familiarity with the regulatory requirements so as to be able to oversee the vendor’s work; remember that the responsibility for compliance is ultimately on you. Make sure the vendor’s process and results are clearly and comprehensively documented and then ensure that Internal Audit and Compliance are part of that oversight. “Outsource” doesn’t mean “forget about it;” thorough and complete understanding and documentation is part of the requirements.

- Have a plan for ongoing compliance, whether it is to transition to internal resources or to retain vendors indefinitely. Set expectations for the frequency of the validation process, which regulations require to be performed at least annually, and more often if warranted by the extent of your AVM usage.

In Conclusion

AVM testing and validation is only one component in your overall valuation and evaluation program. Unlike Appraisals and some other forms of collateral valuation, AVMs, by their nature as quantitative predictive models, lend themselves to just the type of statistically-based outcomes analysis the regulators set forth. Recognizing this, elements of the requirements can be an outsourced process, but it must be a complement to enterprise-wide policies and practices around the permissible, safe and prudent use of valuation tools and technologies.

The process of validating and documenting AVMs may seem daunting at first, but for the past 10 years AVMetrics has been providing peace of mind for our customers, whether as the sole source of an outsourced testing and validation process (that tests every commercial AVM four times a year), or as a partner in transitioning the process in-house. Our experience, professional resources and depth of data have enabled us to standardize much of the processing while still providing the customization every institution needs. And probably one of the most critical boxes you can check off when outsourcing with AVMetrics is the very large one that requires independence. It also bears mentioning that having been around as long as we have, our customers have generally all been through at least one round of regulatory scrutiny, and the AVMetrics process has always passed regulatory muster. Regulatory reviews already present enough of a challenge, so having a partner with established credentials is critical for a smooth process.

2020 Testing Schedule Announced

AVMetrics’ ongoing independent AVM testing continues in 2020 according to the schedule in the link below. Please download the pdf with the entire 2020 testing schedule.