AVMetrics’ ongoing independent AVM testing continues in 2023 according to the schedule in the link below (updated as of January 3, 2023). Please download the PDF with the revised 2023 testing schedule.

Honors for the #1 AVM Changes Hands in Q3

We’ve got the update for Q3 2022. Our top AVM GIF shows the #1 AVM in each county going back 8 quarters. This graphic demonstrates why we never recommend using a single AVM. Again, there are 19 AVMs in the most recent quarter that are “tops” in at least one county!

The expert approach is to use a Model Preference Table® to identify the best AVM in each region. (Actually, our MPT® typically identifies the top 3 AVMs in each county.) Or you could use a cascade to tap into the best AVM for your application.
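To make the idea concrete, here is a minimal sketch of a preference table feeding a cascade. It is an illustration only: the model names, the error figures, and the function names `build_preference_table` and `cascade_value` are hypothetical, and ranking by mean absolute percentage error is an assumption of this sketch rather than a description of how the MPT® is actually built.

```python
from collections import defaultdict


def build_preference_table(errors, top_n=3):
    """Rank models within each county by mean absolute percentage error.

    `errors` is an iterable of (county, model, abs_pct_error) tuples.
    Returns {county: [best_model, second_best, ...]} with up to top_n models.
    """
    totals = defaultdict(lambda: [0.0, 0])  # (county, model) -> [sum, count]
    for county, model, err in errors:
        entry = totals[(county, model)]
        entry[0] += err
        entry[1] += 1

    by_county = defaultdict(list)
    for (county, model), (total, count) in totals.items():
        by_county[county].append((total / count, model))

    # Lowest average error first; keep only the top_n models per county.
    return {
        county: [model for _, model in sorted(scores)[:top_n]]
        for county, scores in by_county.items()
    }


def cascade_value(county, preference_table, valuations):
    """Return (model, value) from the highest-ranked model that has a value.

    `valuations` maps model name -> value, or None for a "no hit".
    Returns None if no ranked model produced a value.
    """
    for model in preference_table.get(county, []):
        value = valuations.get(model)
        if value is not None:
            return model, value
    return None


# Hypothetical test results: (county, model, absolute percentage error).
sample_errors = [
    ("King", "Model A", 0.04), ("King", "Model A", 0.06),
    ("King", "Model B", 0.03), ("King", "Model B", 0.03),
    ("King", "Model C", 0.08),
    ("Pierce", "Model A", 0.02),
    ("Pierce", "Model B", 0.07),
]
table = build_preference_table(sample_errors)

# Model B ranks first in King County but returns no value for this property,
# so the cascade falls through to the next model in the table.
choice = cascade_value("King", table, {"Model B": None, "Model A": 512_000})
```

In this toy data the top model differs between the two counties, which is the point of the map: a single AVM would be second-best somewhere.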

This time, the Seattle area and the Los Angeles region stayed light blue, just like the previous quarter. But, most of the populous counties in Northern California changed hands. Sacramento was the exception, but Santa Clara, Alameda, Contra Costa, San Mateo and some smaller counties like Calaveras (which means “skulls”) changed sweaters. Together they account for 6 million northern Californians who just got a new champion AVM.

A number of rural states changed hands almost completely… again. New Mexico, Wyoming, North Dakota, South Dakota, Montana and Nebraska as well as Arkansas, Mississippi, Alabama and rural Georgia crowned different champions for most counties. I could go on.

All that goes to show the importance of using multiple AVMs and getting intelligence on how accurate and precise each AVM is.

Honors for the #1 AVM Changes Hands in Q2

We’ve got the update for Q2 2022. Our top AVM GIF shows the #1 AVM in each county going back 8 quarters. This graphic demonstrates why we never recommend using a single AVM. There are 19 AVMs in the most recent quarter that are “tops” in at least one county (one more than in Q1)!

The expert approach is to use a Model Preference Table® to identify the best AVM in each region. (Actually, our MPT® typically identifies the top 3 AVMs in each county.)

One great example is the Seattle area. Over the last two years, you would need seven AVMs to cover the most populous 5 counties of the Seattle environs with the best AVM. What’s more, three different AVMs have held the top spot in King County alone.

A number of rural states changed hands almost completely. New Mexico, Wyoming, North Dakota, South Dakota, Montana and Kansas crowned different champions for most counties.

All that goes to show the importance of using multiple AVMs and getting intelligence on how accurate and precise each AVM is.

AVM Regulation – Twists and Turns to Get Here

The Era of Full Steam Ahead!

Six months before the pandemic, we published an article on the outlook for regulation related to AVMs. At the time, we identified three trends.

- The administration was encouraging more use of AVMs (e.g., via hybrids), and tempering that with calls for close monitoring of AVMs.

- The de minimis threshold change foreshadowed an increase in reliance on AVMs in some lower value mortgages.

- The Appraisal Subcommittee summit was focused on standardization across agencies and alternative valuation products, namely, AVMs. Conversation focused on quality and risk as well as speed.

We saw those trends pointing to increased AVM use balanced by a focus on risk, quality and efficiency.

Sure enough, the following events unfolded:

- The de minimis threshold was indeed raised, right before the pandemic changed everything.

- The appraisal business was turned upside down for a period during the pandemic.

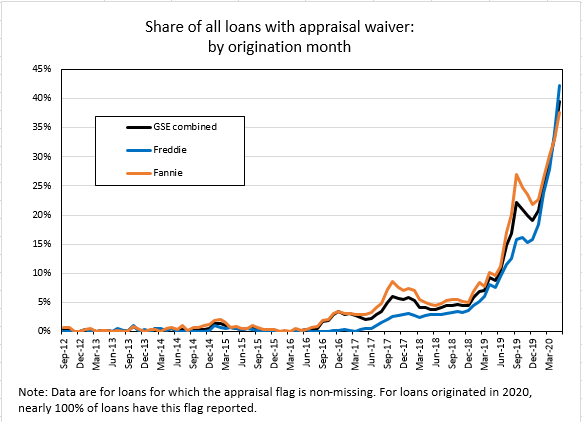

- Property Inspection Waivers (PIWs) took off in a big way as Fannie and Freddie skipped appraisals on a huge percentage of their originations (up to 40% at times).

Halt! About Face!

And then the new administration changed the focus entirely. No longer were the conversations about speed, efficiency, quality, risk and appraisers being focused on their highest and best use. Instead, conversations focused on bias.

Fannie produced a report on bias in appraisals. CFPB began moving on new AVM guidelines and proposed using the “fifth factor” to measure Fair Lending implications for AVMs. Congress held committee hearings on AVM bias.

New Direction

Then The Appraisal Foundation’s Industry Advisory Council produced an AVM Task Force Report. Two of AVMetrics’ staff participated on the task force and helped present its findings recently in Washington D.C.

The Task Force made specific recommendations, but first it helped educate regulators about the AVM industry.

One specific recommendation was to consider certification for AVMs. Another was to use the same USPAP framework for the oversight of AVMs as is used for the oversight of appraisals. It’s all laid out in the AVM Task Force Report.

Taking It All In

Our assessment three years ago was eerily accurate for the subsequent two years. Even the unexpected pandemic generally moved things in the direction that we were pointing to: increased use of AVMs through hybrids.

What we failed to anticipate back then was a complete change in direction with the new administration, and maybe that’s to be expected. It’s hard to see around the corner to a new administration, with new personnel, priorities and policy objectives.

The Task Force Report provides some very practical direction for regulations. But the recent emphasis on fair lending, which emerged after the Task Force began meeting and forming its recommendations, could influence the direction of things. The end result is a combination of more clarity and, at the same time, new uncertainty.

Honors for the #1 AVM Changes Hands

We’ve updated our Top AVM GIF showing the #1 AVM in each county going back 8 quarters. This graphic demonstrates why we never recommend using a single AVM. There are 18 AVMs in the most recent quarter that are “tops” in at least one county!

The expert approach is to use a Model Preference Table to identify the best AVM in each region. (Actually, our MPT® typically identifies the top 3 AVMs in each county.)

Take the Seattle area for example. Over the last two years, you would almost always need two or three AVMs to cover the most populous 5 counties of the Seattle environs with the best AVM. However, it’s not always the same two or three. There are four of them that cycle through the top spots.

Texas is dominated by either Model A, Model P or Model Q. But that domination is really just a reflection of the vast areas of sparsely inhabited counties. The densely populated counties in the triangle from Dallas south along I-35 to San Antonio and then east along I-10 to Houston cycle through different colors every quarter. The bottom line in Texas is that there’s no single model that is best in Texas for more than a quarter, and typically, it would require four or five models to cover the populous counties effectively.

Demystifying home pricing models with Lee Kennedy

Earlier this year, Lee Kennedy appeared with Matthew Blake on the HousingWire Daily podcast, Houses in Motion.

They covered a number of topics in valuations, from iBuying to AVMs, including:

- Democratizing the treasure trove of appraisal data that Fannie Mae maintains

- The inputs into AVMs

- What fraction of the housing market can effectively be valued by AVMs

- How to use multiple AVMs effectively

- What complexities Zillow was dealing with in their iBuying endeavor

How AVMetrics Tests AVMs Using our New Testing Methodology

Testing an AVM’s accuracy can actually be quite tricky. You might think that you simply compare an AVM valuation to a corresponding actual sales price – technically a fair sale on the open market – but that’s just the beginning. Here’s why it’s hard:

- You need to get those matching values and benchmark sales in large quantities – like hundreds of thousands – if you want to cover the whole nation and be able to test different price ranges and property types (AVMetrics compiled close to 4 million valid benchmarks in 2021).

- You need to scrub out foreclosure sales and other bad benchmarks.

- And perhaps most difficult, you need to test the AVMs’ valuations BEFORE the corresponding benchmark sale is made public. If you don’t, then the AVM builders, whose business is up-to-date data, will incorporate that price information into their models and essentially invalidate the test. (You can’t really have a test where the subject knows the answer ahead of time.)

Here’s a secret about that third part: some of the AVM builders are also the same companies that are the premier providers of real estate data, including MLS data. What if the models are using MLS data listing price feeds to “anchor” their models based on the listing price of a home? If they are the source of the data, how can you test them before they get the data? We now know how.

We have spent years developing and implementing a solution because we wanted to level the playing field for every AVM builder and model. We ask each AVM to value every home in America each month. Each one provides roughly 110 million AVM valuations each month. There are over 25 different commercially available AVMs that we test regularly. That adds up to a lot of data.

A few years ago, it wouldn’t have been feasible to accumulate data at that scale. But now that computing and storage costs make it feasible, the AVM builders themselves are enthusiastic about it. They like the idea of a fair and square competition. We now have valuations for every property BEFORE it’s sold, and in fact, before it’s listed.

As we have for well over a decade now, we gather actual sales to use as the benchmarks against which to measure the accuracy of the AVMs. We scrub these actual sales prices to ensure that they are for arm’s-length transactions between willing buyers and sellers — the best and most reliable indicator of market value. Then we use proprietary algorithms to match benchmark values to the most recent usable AVM estimated value. Using our massive database, we ensure that each model has the same opportunity to predict the sales price of each benchmark.
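The matching step can be pictured with a small sketch. The matching algorithms themselves are proprietary, so this is only an illustration under stated assumptions: the function name `latest_usable_valuation` is hypothetical, and "usable" is taken here to mean a valuation dated strictly before the listing date, so the model could not have seen the listing or sale price.

```python
from datetime import date


def latest_usable_valuation(valuations, cutoff):
    """Pick the most recent valuation dated strictly before `cutoff`.

    `valuations` is a list of (as_of_date, value) pairs for one property and
    one model; a value of None means the model returned no estimate.
    `cutoff` is the date after which the model could have seen the listing
    or sale (assumed in this sketch to be the listing date).
    Returns the (as_of_date, value) pair, or None if nothing qualifies.
    """
    usable = [v for v in valuations if v[0] < cutoff and v[1] is not None]
    return max(usable, key=lambda v: v[0]) if usable else None


# Hypothetical monthly valuations from one model for one property.
monthly = [
    (date(2021, 1, 15), 400_000),
    (date(2021, 2, 15), 405_000),
    (date(2021, 3, 15), 430_000),  # produced after the home was listed
]
listing_date = date(2021, 3, 1)

# The March valuation is excluded, so the February value is the one compared
# against the eventual sale price.
match = latest_usable_valuation(monthly, listing_date)
```

Applying the same cutoff to every model for a given benchmark is what gives each one the same opportunity to predict the sale price.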

AVMetrics next performs a variety of statistical analyses on the results, breaking down each individual market, each price range, and each property type, and develops results which characterize each model’s success in terms of precision, usability, error and accuracy. AVMetrics analyzes trends at the global, market and individual model levels. We also identify where there are strengths and weaknesses and where performance improved or declined.
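For readers who want a feel for this kind of analysis, the sketch below computes a few summary statistics that are common in AVM evaluation: hit rate, median percentage error, mean absolute percentage error, and the share of estimates within 10% of the sale price. These are assumptions chosen for illustration; the text above does not spell out the exact measures AVMetrics uses, and the function name `summarize` and the sample figures are hypothetical.

```python
from statistics import mean, median


def summarize(pairs):
    """Summarize one model's performance against benchmark sales.

    `pairs` is a list of (avm_value, benchmark_price); avm_value is None
    when the model returned no estimate (a "no hit").
    """
    hits = [(v, p) for v, p in pairs if v is not None]
    if not hits:
        return {"hit_rate": 0.0}

    # Signed percentage error: positive means the model overvalued.
    pct_errors = [(v - p) / p for v, p in hits]
    abs_errors = [abs(e) for e in pct_errors]
    return {
        "hit_rate": len(hits) / len(pairs),
        "median_error": median(pct_errors),
        "mean_abs_error": mean(abs_errors),
        "within_10_pct": sum(e <= 0.10 for e in abs_errors) / len(hits),
    }


# Hypothetical matched pairs for one model in one market.
results = summarize([
    (105_000, 100_000),  # 5% over
    (180_000, 200_000),  # 10% under
    (345_000, 300_000),  # 15% over
    (None, 250_000),     # no hit
])
```

Running the same summary separately for each market, price range, and property type is what turns one national number into the kind of breakdown described above.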

In the spirit of continuous improvement, AVMetrics provides each model builder an anonymized comprehensive comparative analysis showing where their models stack up against all of the models in the test; this invaluable information facilitates their ongoing efforts to improve their models.

Finally, in addition to quantitative testing, AVMetrics circulates a comprehensive vendor questionnaire semi-annually. Vendors that wish to participate in the testing process answer roughly 100 parameter, data, methodology, staffing and internal testing questions for each model being tested. These enable AVMetrics and our clients to understand model differences within both testing and production contexts. The questionnaire also enables us and our clients to satisfy certain regulatory requirements describing the evaluation and selection of models (see OCC 2010-42 and 2011-12).

AVMetrics Responds to FHFA on New Appraisal Practices

FHFA, the oversight agency for Fannie Mae and Freddie Mac, published a Request for Input on December 28, 2020. The RFI covered Appraisal-Related Policies, Practices and Processes. AVMetrics put forth a response including several pages and several exhibits making the case for using AVMs responsibly and effectively in a Model Preference Table®. Here is the Executive Summary:

The lynchpin to many of the appraisal alternatives is an Automated Valuation Model, a subject which AVMetrics has studied assiduously and relentlessly for more than 15 years. We point out that even an excellent AVM can be improved by the use of a Model Preference Table. MPTs enable better accuracy, fewer “no hits” and fewer overvaluations.

We also suggest an escalated focus on AVM testing, and we use our own research and citations of OCC Interagency Guidelines to emphasize the importance of testing to effectively use AVMs. We suggest that an “FSD Analysis” like the one we describe reduces risk by avoiding higher risk circumstances for using an AVM.

We suggest that the implementation of a universal MPT by the Enterprises will improve the collateral tools available and reduce the risk of manipulation by lenders. We also believe that a universal MPT can help redeploy appraisers to their highest and best use: the qualitative aspects of appraisal work. Our suggestion is that the GSEs endeavor to make the increased use of AVMs a benefit to appraisers, increasing their value-added and bringing them along in the transition.

AVMetrics’ full response is available here:

Property Inspection Waivers Took Off After the Pandemic Set In

Appraisals are the gold standard when it comes to valuing residential real estate, but they aren’t always necessary. They’re expensive and time-consuming, and in the era of COVID-19, they’re inconvenient. What’s the alternative?

Well, Fannie and Freddie implemented a “Property Inspection Waiver” (PIW) alternative more than a decade ago. However, it’s been slow to catch on.

But now, maybe the tipping point has arrived during the pandemic. Recently published data by Fannie and Freddie show approximately 33% of properties were valued without a traditional appraisal! (Most, if not all, would have used an AVM as part of the appraisal waiver process.) Ed Pinto at AEI’s Housing Center calls it a hockey stick.

So, what changed? Here are some thoughts and hypotheses:

- Guidelines changed a little. We can see in the data that Freddie did almost zero PIWs on cash-out loans, but in May that changed, and at least for LTVs below 70%, they did almost 15,000 cash-out loans with no appraisal.

- AVMs changed. Back when PIWs were introduced, AVMs operated in a +/- 10% paradigm. They were more concerned with hit rates than anything else, and they worked best on tract homes. But today they are operating in a +/- 4% world, hit rates are great, and cascades allow lenders to pick the AVM that’s most accurate for the application.

- Borrowers changed. These days, borrowers have grown up with online tools that give them answers. They are more likely to read about their symptoms on WebMD before going to the doctor, and they are more likely to look their home up on Zillow before calling their realtor. In the past, if a home was purchased with a low LTV, who was it that required an appraisal? Typically, it was borrowers who wanted the appraisal, more as a safety blanket than anything else. They wanted reassurance that they were not getting ripped off. Today, for some people, Zillow can provide that reassurance without the $500 expense.

- Lenders changed. You would think that they are nimble and adaptable to new opportunities. But where the rubber meets the road, it’s still people talking to customers, and underwriters signing off on loans. If loan officers aren’t aware of the guidelines, they’ll just order an appraisal. Often ordering an appraisal, because it can take so long, is just about one of the first things done in the process, regardless of whether it’s necessary. After all, it’s usually necessary, and it takes SO long (relatively speaking, of course). I have known lenders who required their loan officers to collect money for an appraisal to demonstrate customer commitment. But, lenders are starting to incorporate PIWs into their processes and take advantage of those opportunities to present a loan option with $500 less in costs.

Accurate AVMs are a necessary but not sufficient condition for PIWs. Now that AVMs are much more accurate, PIWs are much more practical, and we’re seeing much higher adoption.

So now what should we expect going forward? The trend will likely continue. There’s a lot of room left in some of those categories for PIWs to grab a larger share.

If agencies are doing it, everyone else will. If there are lenders not using PIWs to the extent possible, they are going to be at a disadvantage.

Black Knight’s Cascade Improved

Black Knight just announced an addition to its ValuEdge Cascade. It will now include the CA Value AVM, developed by Collateral Analytics, which recently became a Black Knight company.

AVMetrics helped with the process, doing the independent testing used to optimize the cascade performance. Read more about it in their press release.